AI Musings

Hey there. Here’s a big update on a bunch of shit about AI.

So, I’ll cap off the last week’s worth of stuff before I start to describe my first ventures into the project. I’ll split it into two blog posts so that y’all don’t get confused. Alright, so that I can avoid this later in the next post about Radio.dat, a big issue with extracting text from the Japanese version is the call-specific Kanji characters.

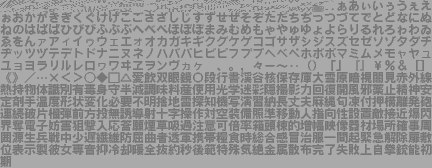

At first, you can figure out that the hex codes signifying each character are two bytes, where they correspond to a lookup chart for all the hiragana and katakana, as well as some additional characters. Here’s a picture of the actual font map that Green_Goblin pulled from the game:

It took a good chunk of time to figure out how hiragana and katakana were arranged, which was sort of in the same order as SHIFT-JIS (though apart from a few places the game does NOT use that encoding). You could map it offset to hiragana. But Katakana follows a different order in shift-jis so an offset didn’t work, but I did stare at it for 10 minutes and realize that it was ordered the same way as hiragana but then offset further up in value.

Then, another user WantedThing shared his list of characters extracted from the Integral PC version. Looking at it, it seemed like though there were some mis OKsing, theyu followed a bit of the order of this font table, so I started to fill in the gaps and voila. We had those down.

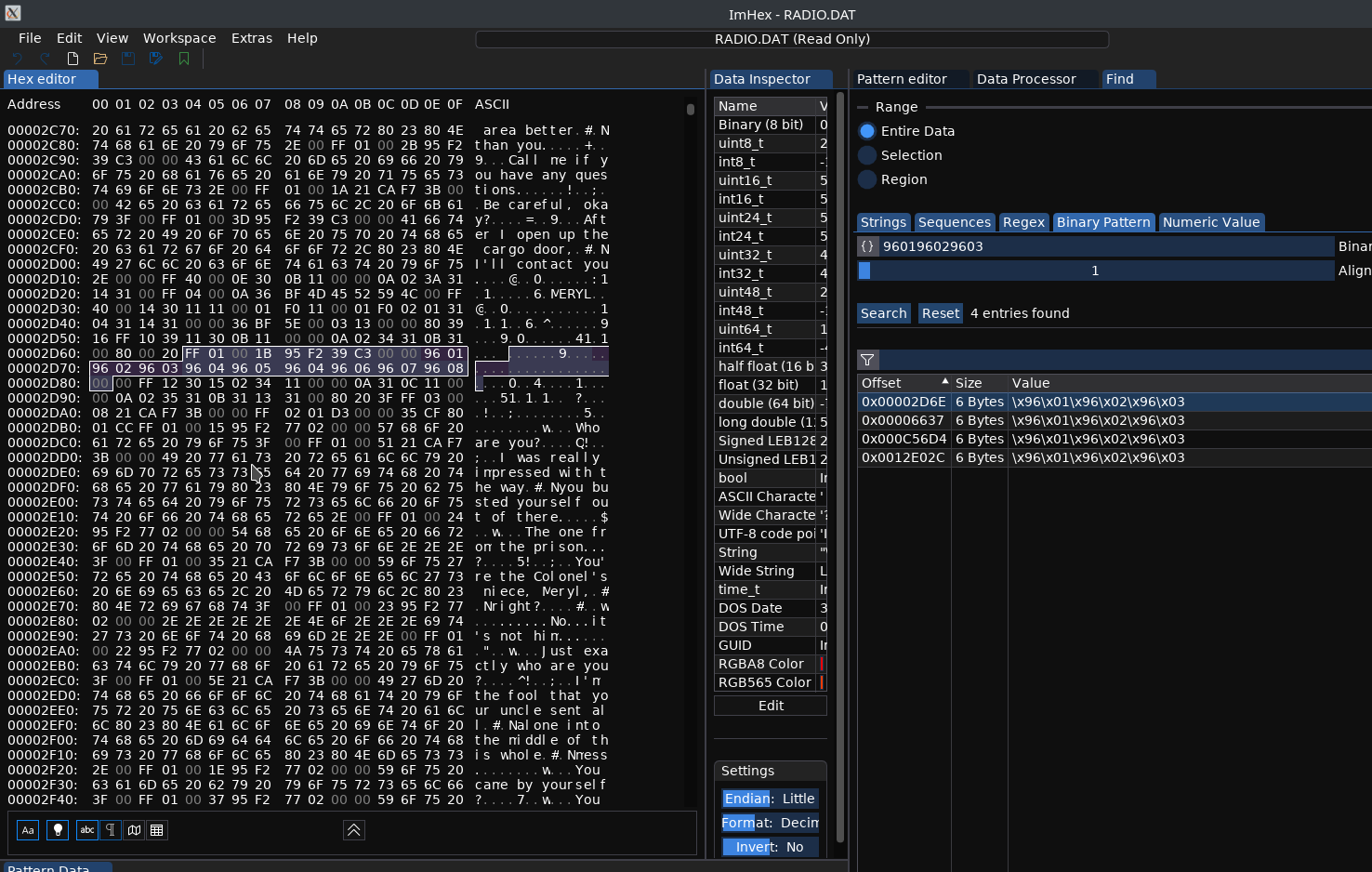

But then there was this range of characters in the 0x9600range that just didnt match anything. Most of the font was in 0x90, 0x80 range. So what were these in the 0x9600 range?

Variable characters

Well, character data is stored after every call. So this doesn’t usually happen in the US version, but it does in one specific place.

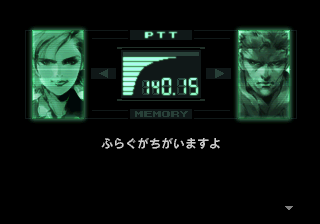

What you’re looking at is a dialogue subtitle where we’re printing Japanese Kanji, but where are these?

At the end of the call is where you find a bunch of graphics data, and each 36 bytes here is a 12x12 font character for a Kanji that is only really used once.

So, in the story of me figuring out Radio.dat, these were junk data throwing off my readings, since I knew each call started with a frequency in two bytes. 0x36bf translates to 14015, which is Meryl, so we can see the next call starts after that highlighted block. So what do these represent here?

Well… really nothing in the US version. These actually aren’t Kanji, but since the US version doesn’t have Japanese in its normal font set, its the characters to essentially say “The flag is wrong”. This call isn’t supposed to be seen, so it has a developer note (this is mentioned in the cutting room floor).

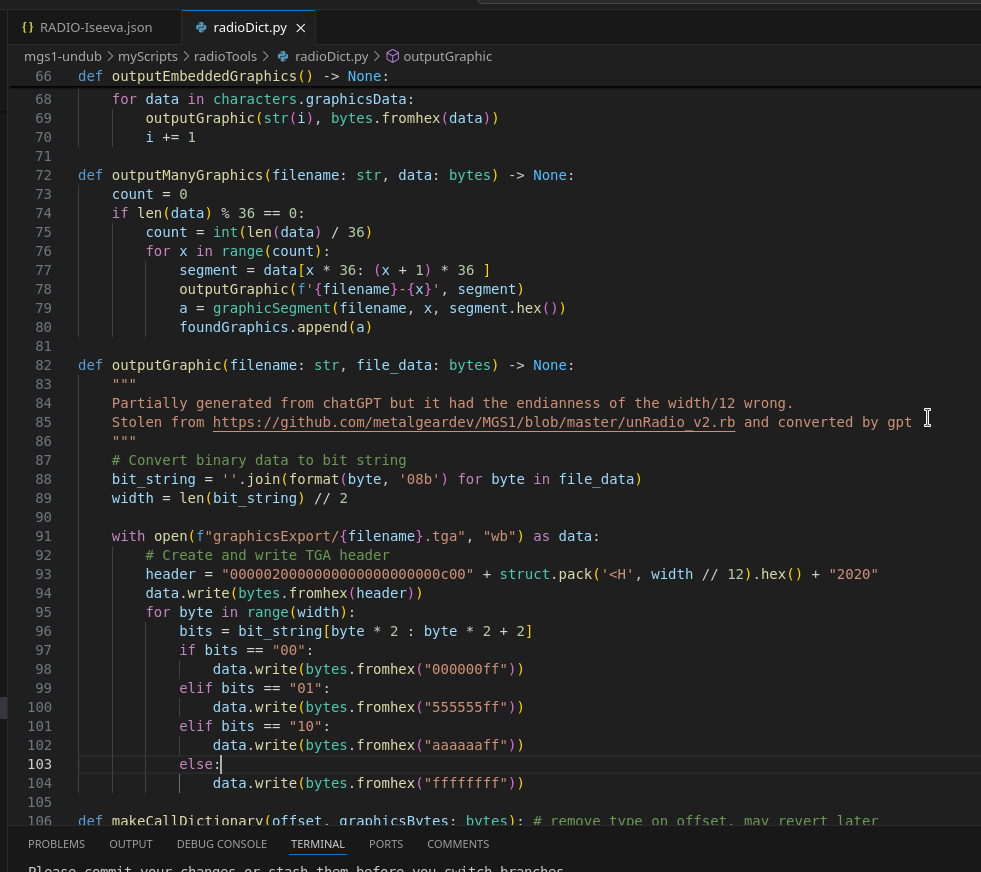

So what I ended up having to do to translate these was actually make a custom dictionary for every call i decode. It’s a massive pain in the ass but here’s how it works:

- When we get to a Radio call, we use the length to determine where the graphic data starts.

- We then grab the graphics data in 36-byte chunks until the next chunk starts with something in the codec range (basically hex for 14000 to 14300, it’s larger because there are some mistakes in the code).

- Those are sent over to the dictionary to return essentially a custom dict only used for that call that represent

0x9601through… however many characters there are.

Basically each character is represented by two bytes. But then, anything in the 0x9601 or greater range are the custom characters, and they basically are represented as 0x96 with the second byte being the index of the graphic following the call.

The easiest way to translate these, I figured, was we have a dict that took in the bytes and returned the character.

So I figured that’s not too hard, how many can there be? I modified my script to output all of the graphics data and it turned out to be like 700. :| You can output them all and then use a line deduplicator to find all the unique ones and its still a lot.

(Later I realized it’s far more. Integral has its Staff commentary radio calls, and those are heavy in not only developers names, but also the technical terms they use to describe their portions of the game. The final count is well over 1100!)

The matching problem

So that’s all well and good, you say, you can find the characters and then just put them back in based on their byte data. Easy right? Well… no. It’s hard. Like… really fucking difficult.

Basically it starts with this. Kanji are really complicated to draw and yet we’re only rendering them with 12 x 12 pixel squares. So it’s really hard to mask them against what they actually are given the low resolution. We tried OCR and actually goblin went pretty far into matching all of the characters which was great. The only problem is there were many that he couldn’t find and many that were wrong.

This is a problem. We never really found a solution for this up until… probably the last couple of weeks. I was essentially going through line by line each time there was a character missing and trying to find the equivalent line in the game script from the masters collection, if you set the language to Japanese, you can get the screenplay book in Japanese rather than English. Even though the .json format that it uses is really bizarre. It has several sets of essentially HTML instruction laying out dialogue in different places, different keys represent voices and then some represent the titles. Some represent the script descriptions like actions and some actually represent dialogue. Imagine rendering three or four webpages all at once that combined make a script or screenplay book. So needless to say these weren’t in any good shape to actually search through the dialogue was at least all in one place so I could search for a specific passages but really what I ended up doing was just out putting it all as best I could to separate things and then just pulled from it as needed.

I work for the last few weeks as I have already completed. Most of the extraction scripts was to go through line by line. Find the nearest act acre point that I could to find the original script at that portion of the game and then start to line by line figure out which characters were whi they didn’t have anything to do with the graphics data so I do still worry there were some mismatches, but at this point, what can you do?

So the other night I had this bout of insomnia and I’m laying in bed thinking about the project of course and I start to think this is a language thing, right? So why not use a language model to figure out what’s going on?

Couldn’t we just train an LLM on the original game script, and say “What dialogue is this closest to?”

So again, I’m hesitant to ask ChatGPT to just write code for me, but I’ve done so in a few different places where I felt like I just didn’t wanna do it myself and I preferred to just quickly either make a tool or test something out to see if it’s viable before I really dig in and build something.

For example, the original character data decoder was written by someone named SecaProject. I have a few things to credit to him in my project, but one of them is I (shamelessly) ripped off his code from github.

Anyway, I didn’t know Ruby, so I took the Ruby script and fed it into ChatGPT and said hey convert this to Python and what do you know he did. She did? Whatever gender the AI is… It did the work for me of converting a language I didn’t know.

With that we can now put the characters but they’re so tiny. We can’t OCR them. I was going through call after call and doing some manual OCR where context would help but it was still proving to be a really tedious process and I figured there’s gotta be a better way. So the other night I decided maybe it was worth asking if there’s a way to have a language model look at this and figure it out.

Can AI really take my job? Let’s find out.

So the other night I took to ChatGPT, and I asked it to figure out an algorithm that we could use to identify all of the matching lines in the script based on the missing characters and lo and behold we had just a bit of success, and for a brief minute, I thought this was really gonna work.

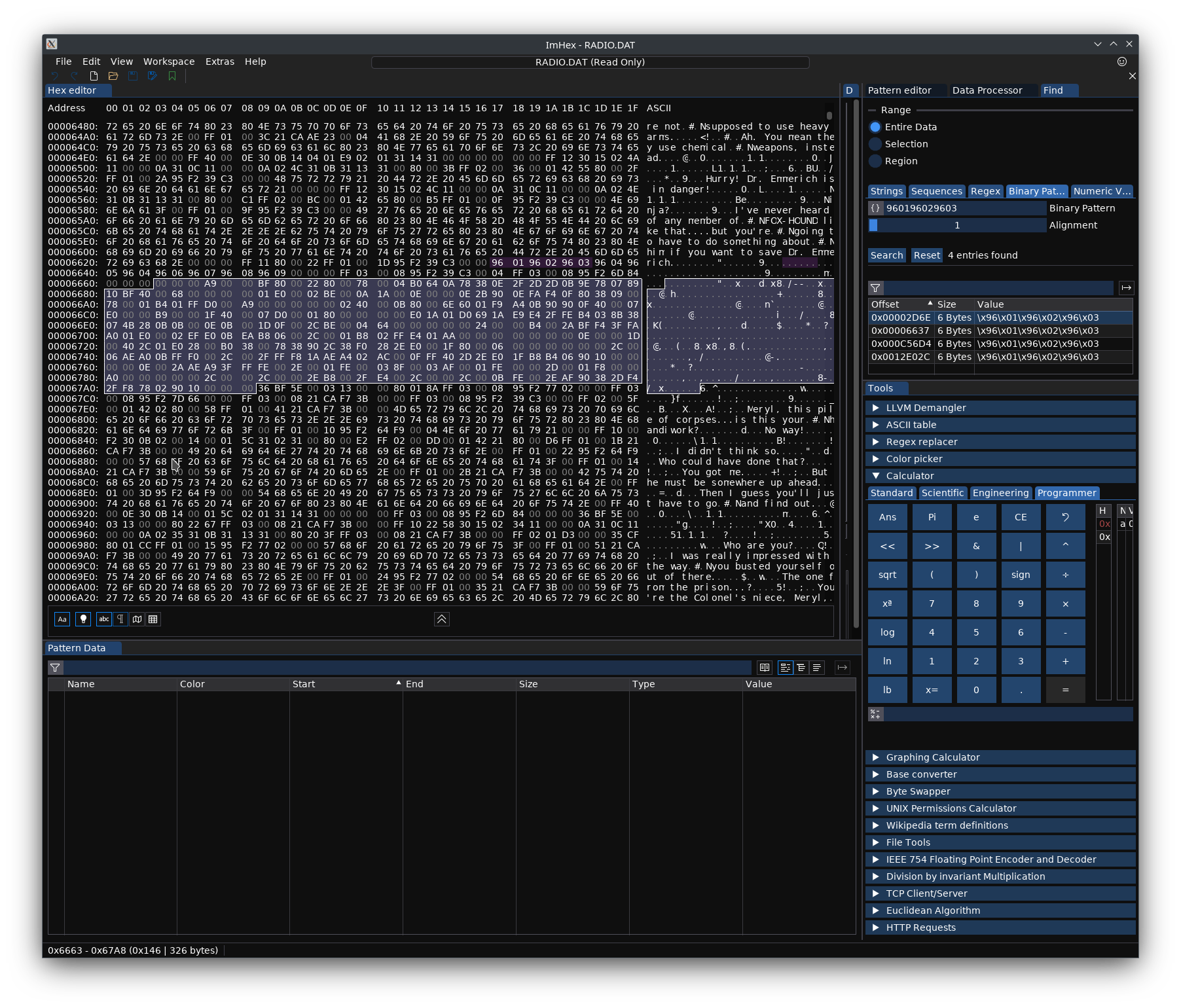

A quick snippet of what it was doing in the terminal:

Extracted Line: []とかセンサーをよけて[]んで。

Best Match Found: 何とかセンサーをよけて進んで

Does this match? (y/n): y

✅ Confirmed mappings:

0a6aa90ebffe2d00383caa38bcff383ceb382ceb382cff382c00382c01b82c00f4000000 → 何

24b4a078fae82afffc02fae469bae4bcbff82cbae42cbffc2eaaa8bbfffd20aaa8000000 → 進

-------------------------

Extracted Line: チャフを使えば[][]の電子装置をだませると[]う。

Best Match Found: チャフを使えば戦車の電子装置をだませると思う

Does this match? (y/n): y

✅ Confirmed mappings:

7728ec3bb8ee2ab8e93ffffe3baee83baced3ffcf82ba8b4bffcf96baafe038268000000 → 戦

2aaea83ffffc1aaea42ffff82eaeb82eaeb82ffff81aaea4bffffe6aaea9002c00000000 → 車

2ffff82eaeb82eaeb82eaeb82eaeb82ffff80198042ade282ec4ad7aeaed21ffd4000000 → 思

-------------------------

Extracted Line: すごいわ、スネーク![][]を[]したのね!

Best Match Found: 詳しいのね?

Does this match? (y/n): n

Here’s a montage of 40 minutes of character identification:

Honestly, I was so stoked that this was working that I took to the discord and went on a tirade about how amazing this was. Unfortunately, I ran into some other problems along the way you could see some manual edits that I had to do here in the video. One of the problems was even though we could find the equivalent line in the script matching would get off and it would pick the wrong characters. There are some other weird eccentricities in the script. When you extracted with the codec lines, there are some codes for furigana. There are also other things we had to take out like line breaks, but then I was also concerned that I wasn’t looking at the graphics because when I was doing the script replacement already I didn’t look at the actual graphics so I had it displaying the graphics for me. You can see them popping up on the screen rendered in their 12 x 12 glory. This gives you an idea of how difficult it is to OCR them. You can get a bit better with context, but even so a lot of times they just don’t look anything like the character because it’s so low Rez.

So I started to run into a few problems:

- The first issue was that it wasn’t always matching the correct characters.

- I also was thinking that I could keep adding the characters to the list and then re-extract the codec dialogue and then go back through with more success. The problem became every time I started it started with the same order so I was tapping through a bunch of non-matches.

- The only ones that really match accurately were shorter sentences.

Eventually, I still had enough doubt in the script that I figured it might be better to refine it a bit more. I threw other ideas at the wall and eventually I tried a couple other matching algorithms, but they started to drift away from actually using an LLM to determine what the correct script was. I think in the end it’s going to cause more of a headache trying to figure out something that will identify the characters correctly, so it’s much better if I just do it manually again at the very least I have some tools built now that will either find the original dialogue so I can go to that place in the script and figure it out. I could also make a small script that would quickly display the character so that I know more or less what I’m matching I think would also just became very unhealed. These as I was adding all of these new codes to the Dictionary, but I wasn’t exactly replacing them from all that had already been ma.

I think in the future, maybe it would be more possible to just get something that would proofread and try to match the dialogue once all characters are at least filled in. Then maybe it could point out some places where either the game screenplay doesn’t exactly match the dialogue we extract or it might actually be able to come up with some places where characters are mismatched either way I figure it’s a little bit too unwieldy to work with right now. We’ll have to revisit this later.

I’m a little bummed just because this is the part of the project I really dislike. Matching the characters is a very tedious operation. Even those 40 minutes you see time lapse in the clip above just took so much out of my brain that I was very tired and pretty much passed out right after. Granted it was almost the end of the night anyway, but it was just a lot of manual work that wasn’t the most fun.

Anyway, for anyone interest in LLMs, here’s a link to the full conversation I had with it over the course of the last two days: https://chatgpt.com/share/67b964a2-683c-8001-b565-3fc10f54872c

I’m not gonna say it was a complete waste of time, though I have some new scripts that might shed some light on which dialogue I’m actually looking at. It’s also never a waste of time to try something even if it doesn’t work out and it was impressive to match about 150 characters in the span of about 40 minutes. Some of this also helped me figure out some other problems I’ve been running into with virtual environments in Python and flatpak fuckery (because of running VS code via flatpak).

Is this the end of LLM work?

Not by a longshot is this anywhere near over. I do feel like there’s probably some merit in having it go back and check the dialogue lines once they’re finished to see if it matches there are more possibilities. I was thinking of such as that context would matter in making matches so if it could read a whole conversation, then it would probably understand more of what specific line we were trying to correct. It would be a fun project, but would definitely distract from my overall goal at this point.

Either way it’s gonna be back to the translation efforts for me soon. Part of the other thing making this a little tedious right now is my current effort was looking at each call per given stage and translating those I came up with an interesting way to increase my productivity a little bit, but even so a better way to do that would’ve involved having the characters completely translated before doing this.

Anyway, that’s all for me for tonight. This is the first time I’m using an actual dictation to write some of this blog post rather than typing it all out. Hopefully it’s all making sense. I don’t really have time to go back and edit it, but I guess I’ll feed it through yet another LLM and see if it all makes sense. I did spend a little time getting fabric set up on my PC so hopefully that will allow me to or quickly work with some of the large text reviewing I need.

That’s all for now next time I’ll actually go over a bunch more about starting with radio.dat, and the struggles and evolutions I ran into. That will more accurately start to put the history of this project into focus.

PS, What’s Fabric?

Here’s the output I got from fabric’s summarization:

It looks like you've had a big update on AI-related topics! Let me summarize what I can gather from your post:

1. You experimented with using Large Language Models (LLMs) to match characters in an old game's dialogue. While it showed promise, the process wasn't entirely accurate and had some limitations.

2. The LLM was able to correctly identify about 150 characters in a span of 40 minutes, which is impressive. However, there were issues with matching the correct characters, and the process became tedious due to the need for manual corrections.

3. You're not giving up on using LLMs, as you see potential benefits in having them review dialogue lines once they're completed. You also think that having context would improve their ability to make accurate matches.

4. Your current focus is shifting back to translation efforts, which might involve finding ways to increase your productivity and streamlining the process.

Some other notable points from your post include:

* You've been exploring virtual environments in Python and dealing with issues related to flatpak fuckery (whatever that means!).

* You're looking into using Fabric, a tool for automating tasks, to help with large text reviewing.

* Your blog post was written using dictation software, which is cool!

Overall, it seems like you're exploring the possibilities of AI and Large Language Models in your project, while also acknowledging the limitations and challenges that come with using these tools. You're not giving up, though, and are excited to continue experimenting and learning!

Seems legit.